At Aryma Labs, we increasingly rely on information-theoretic methods rather than correlational ones. In case you missed it, the article explaining why is linked in the resources.

To set the background, let me explain what AIC and KL divergence are.

𝐀𝐤𝐚𝐢𝐤𝐞 𝐢𝐧𝐟𝐨𝐫𝐦𝐚𝐭𝐢𝐨𝐧 𝐜𝐫𝐢𝐭𝐞𝐫𝐢𝐨𝐧 (𝐀𝐈𝐂)

AIC = 2k - 2 ln(L)

where

k is the number of estimated parameters in the model

L is the maximized value of the model's likelihood
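As a rough illustration of the formula, here is a minimal Python sketch that computes AIC for a model with Gaussian errors. The Gaussian assumption, the function names, and the residual values are hypothetical and chosen only for the example; they are not taken from any Aryma Labs model.

```python
import math

def aic(log_likelihood: float, k: int) -> float:
    """AIC = 2k - 2 ln(L), where log_likelihood is ln(L) at the fitted parameters."""
    return 2 * k - 2 * log_likelihood

def gaussian_max_log_likelihood(residuals):
    """Maximized Gaussian log-likelihood given a model's residuals.

    Assumes i.i.d. Gaussian errors, with the noise variance set to its
    maximum-likelihood estimate (the mean squared residual).
    """
    n = len(residuals)
    sigma2 = sum(r * r for r in residuals) / n
    return -0.5 * n * (math.log(2 * math.pi * sigma2) + 1)

# Hypothetical residuals from a model with 2 coefficients plus 1 noise variance
residuals = [0.5, -0.3, 0.1, -0.4, 0.2, -0.1]
k = 3
print(aic(gaussian_max_log_likelihood(residuals), k))
```

Note that k here counts the noise variance as a parameter. Conventions differ on this point, which is harmless as long as the same convention is used for every model being compared.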

The principle underlying the use of AIC comes from information theory.

The equation above contains the likelihood, which we try to maximize when fitting a model.

It turns out that maximizing the likelihood is equivalent to minimizing the KL divergence between the distribution of the observed data and the model.
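The reasoning can be sketched in a few lines. The notation is an assumption introduced here for illustration: p is the true data distribution, q_θ is the model with parameters θ, and x_1, ..., x_n are the observed samples.

```latex
\begin{aligned}
D_{\mathrm{KL}}(p \,\|\, q_\theta)
  &= \mathbb{E}_{x \sim p}\!\left[\ln p(x)\right]
   - \mathbb{E}_{x \sim p}\!\left[\ln q_\theta(x)\right] \\
\arg\min_\theta D_{\mathrm{KL}}(p \,\|\, q_\theta)
  &= \arg\max_\theta \, \mathbb{E}_{x \sim p}\!\left[\ln q_\theta(x)\right] \\
  &\approx \arg\max_\theta \, \frac{1}{n} \sum_{i=1}^{n} \ln q_\theta(x_i)
   = \arg\max_\theta \, \ln L(\theta)
\end{aligned}
```

The first term of the KL divergence does not depend on θ, so only the second term matters for the optimization. Replacing the expectation with the sample average gives the log-likelihood (up to the factor 1/n), which is why the maximum-likelihood fit is also the fit with the smallest estimated KL divergence.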

𝐖𝐡𝐚𝐭 𝐢𝐬 𝐊𝐋 𝐃𝐢𝐯𝐞𝐫𝐠𝐞𝐧𝐜𝐞?

From an information-theoretic point of view, KL divergence tells us how much information we lose when we approximate the true probability distribution with another distribution.
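To make this concrete, here is a small sketch that computes the KL divergence between discrete distributions. The three distributions are made-up numbers for illustration only.

```python
import math

def kl_divergence(p, q):
    """D_KL(p || q) in nats for two discrete distributions over the same outcomes.

    Assumes q[i] > 0 wherever p[i] > 0; outcomes with p[i] == 0 contribute nothing.
    """
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

true_dist = [0.5, 0.3, 0.2]        # hypothetical "true" distribution
good_approx = [0.45, 0.35, 0.20]   # close approximation
poor_approx = [0.10, 0.10, 0.80]   # far-off approximation

print(kl_divergence(true_dist, true_dist))    # identical distributions: no information lost
print(kl_divergence(true_dist, good_approx))  # small loss
print(kl_divergence(true_dist, poor_approx))  # much larger loss
```

KL divergence is not symmetric, so the order of the arguments matters: the first argument plays the role of the true distribution and the second the approximation.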

𝐖𝐡𝐲 𝐰𝐞 𝐜𝐡𝐨𝐨𝐬𝐞 𝐦𝐨𝐝𝐞𝐥𝐬 𝐰𝐢𝐭𝐡 𝐥𝐨𝐰𝐞𝐬𝐭 𝐀𝐈𝐂

When comparing models, we choose the one with the lowest AIC because AIC is an estimate of the relative KL divergence between each model and the true data-generating process. The lowest AIC score therefore indicates the least estimated information loss among the candidates.

Now you know how KL divergence and AIC are related and why we choose models with a low AIC score.
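A minimal sketch of such a comparison is shown below. It fits two candidate models to the same simulated response and ranks them by AIC. The data, the two candidates, and the Gaussian-error assumption are all hypothetical choices made for the example.

```python
import math
import random

def gaussian_aic(residuals, n_coefs):
    """AIC = 2k - 2 ln(L) assuming Gaussian errors; k = coefficients + noise variance."""
    n = len(residuals)
    sigma2 = sum(r * r for r in residuals) / n
    log_lik = -0.5 * n * (math.log(2 * math.pi * sigma2) + 1)
    return 2 * (n_coefs + 1) - 2 * log_lik

# Simulated data: a linear trend plus noise (hypothetical, for illustration)
random.seed(0)
x = [float(i) for i in range(30)]
y = [2.0 + 0.5 * xi + random.gauss(0, 1) for xi in x]

mean_x = sum(x) / len(x)
mean_y = sum(y) / len(y)

# Candidate 1: intercept-only model
res_const = [yi - mean_y for yi in y]

# Candidate 2: simple linear regression (closed-form least squares)
slope = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y)) / sum(
    (xi - mean_x) ** 2 for xi in x
)
intercept = mean_y - slope * mean_x
res_lin = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]

scores = {
    "intercept_only": gaussian_aic(res_const, n_coefs=1),
    "linear": gaussian_aic(res_lin, n_coefs=2),
}
best = min(scores, key=scores.get)

# Only differences in AIC are meaningful, so report each score relative to the best
for name, score in scores.items():
    print(name, round(score, 2), "delta:", round(score - scores[best], 2))
```

Both candidates are fitted to the same response on the same observations, which is a precondition for the AIC values to be comparable at all.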

𝐂𝐚𝐮𝐭𝐢𝐨𝐧 𝐚𝐛𝐨𝐮𝐭 𝐀𝐈𝐂

One of the misconceptions about AIC is that it identifies the best model in an absolute sense.

However, the key word here is ‘relative’. AIC only helps in choosing the ‘best model’ relative to the other models in the candidate set.

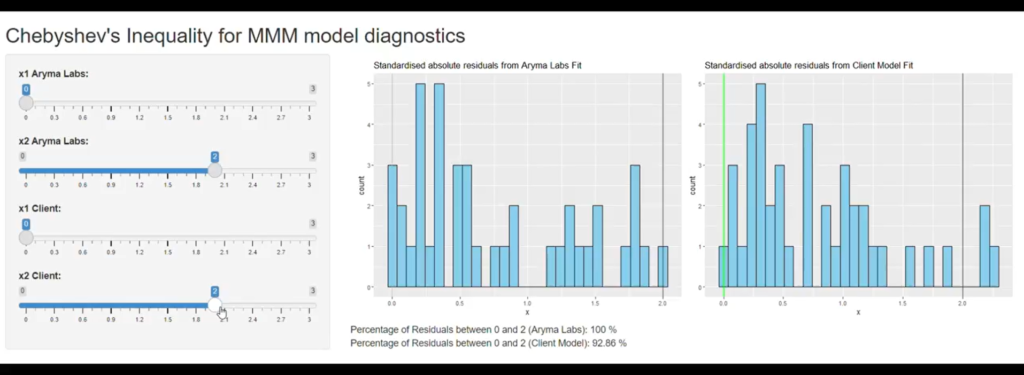

For example, if you had 5 MMM models (fitted to the same response variable) and all 5 were badly overfitted, AIC would simply pick the least overfitted of the five.

AIC will not warn you that all your MMM models are poorly fitted. In a way, AIC is like the supremum of a set: it identifies the top element relative to that set and says nothing about how good that element is in absolute terms.
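The sketch below illustrates the point with a deliberately bad candidate set. For brevity it uses two under-specified models rather than five overfitted MMM models, and the data and the R² check are assumptions added for illustration. AIC still names a winner, and only a separate absolute check reveals that the winner is poor.

```python
import math
import random

def gaussian_aic(residuals, n_coefs):
    """AIC = 2k - 2 ln(L) assuming Gaussian errors; k = coefficients + noise variance."""
    n = len(residuals)
    sigma2 = sum(r * r for r in residuals) / n
    log_lik = -0.5 * n * (math.log(2 * math.pi * sigma2) + 1)
    return 2 * (n_coefs + 1) - 2 * log_lik

# Hypothetical U-shaped response that neither candidate below can represent
random.seed(1)
x = [float(i) for i in range(41)]
y = [(xi - 20.0) ** 2 / 10.0 + random.gauss(0, 1) for xi in x]

mean_x = sum(x) / len(x)
mean_y = sum(y) / len(y)
slope = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y)) / sum(
    (xi - mean_x) ** 2 for xi in x
)
intercept = mean_y - slope * mean_x

residuals = {
    "intercept_only": [yi - mean_y for yi in y],
    "linear": [yi - (intercept + slope * xi) for xi, yi in zip(x, y)],
}
n_coefs = {"intercept_only": 1, "linear": 2}

# AIC happily ranks the candidates and names a winner...
scores = {name: gaussian_aic(res, n_coefs[name]) for name, res in residuals.items()}
winner = min(scores, key=scores.get)

# ...but an absolute check (here R^2) shows how little the winner explains
total_ss = sum((yi - mean_y) ** 2 for yi in y)
r2 = 1 - sum(r * r for r in residuals[winner]) / total_ss
print("AIC winner:", winner, "R^2:", round(r2, 3))
```

In practice the absolute check could be a holdout error, residual diagnostics, or any other measure that does not depend on the rest of the candidate set.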

𝐊𝐋 𝐃𝐢𝐯𝐞𝐫𝐠𝐞𝐧𝐜𝐞 𝐭𝐨 𝐠𝐚𝐮𝐠𝐞 𝐛𝐢𝐚𝐬 𝐢𝐧 𝐌𝐨𝐝𝐞𝐥

Another interesting way we leverage KL divergence is to gauge bias in the model. For a problem like MMM, bias in the model is always unwanted.

The model could be biased for a variety of reasons: misspecification of the model, treatment of multicollinearity through regularization, and so on. We are doing some interesting research using KL divergence to reduce bias in our models (more on this soon).

P.S. Useful links are in the resources.

The credit for the first image is also in the resources.

Resources:

Why Aryma Labs does not rely on Correlation alone

https://open.substack.com/pub/arymalabs/p/why-aryma-labs-does-not-rely-on-correlation?r=2p7455&utm_campaign=post&utm_medium=web

Facts and fallacies of AIC:

https://robjhyndman.com/hyndsight/aic/

Image credit:

https://www.npr.org/sections/thetwo-way/2013/11/16/245607276/howd-they-do-that-jean-claude-van-dammes-epic-split