A week ago, I talked about epistemic uncertainty in the Bayesian framework as a result of uninformative priors. That post drew the expected reactions, and many Bayesian loyalists offered only hand-wavy refutations.

ICYMI, the link to that post is in the comments.

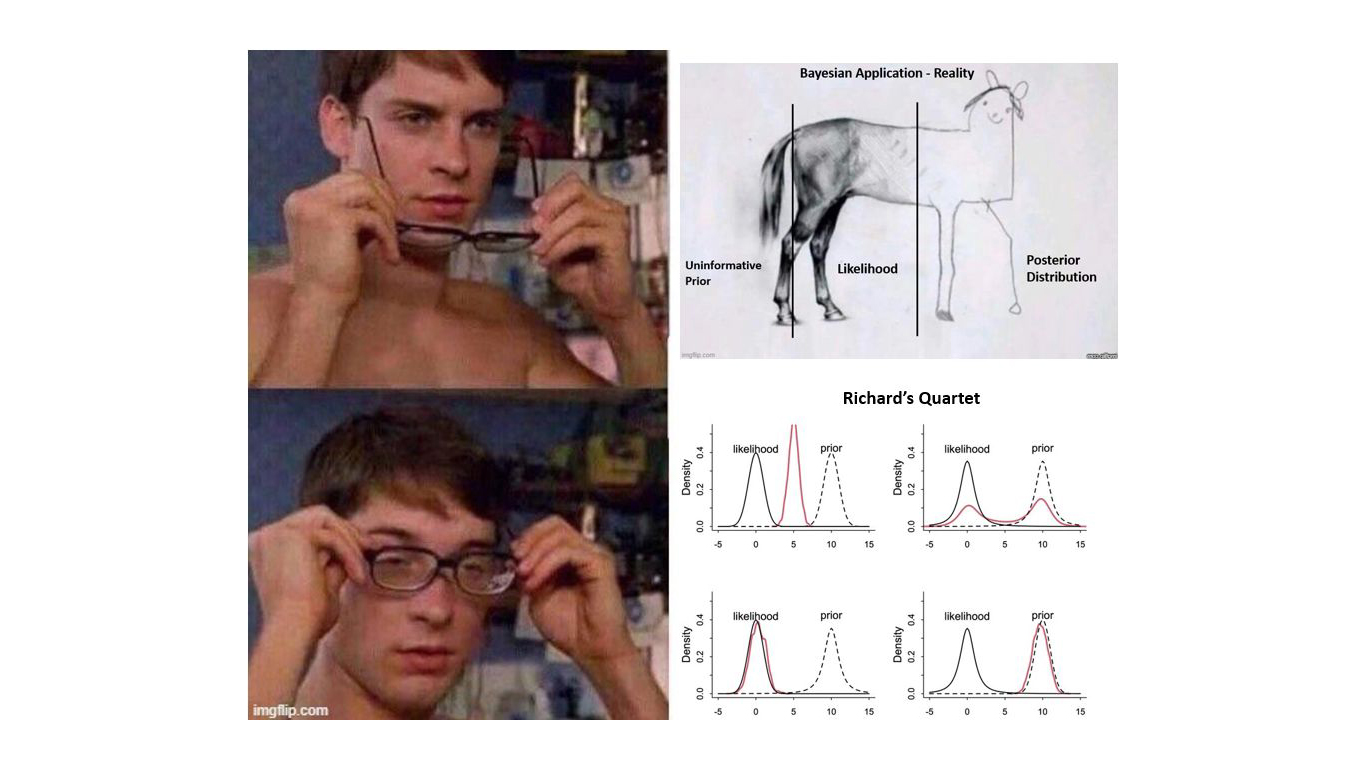

Anyhow, I stumbled upon an interesting tweet from Richard McElreath. I have named it ‘Richard McElreath’s Quartet’, much like Anscombe’s quartet.

So what is Richard McElreath’s Quartet?

Richard McElreath begins his post by saying “Don’t trust intuition, for even simple prior + likelihood scenarios defy it”.

This point is fundamental, and I will link it back to Bayesian MMM later in the post.

But to stay on point, here is what the quartet is all about:

The quartet shows that the choice of prior and likelihood distributions matters a lot. More specifically, the ‘tailedness’ of those distributions matters most.
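To put a number on ‘tailedness’, here is a small sketch (not from the tweet) comparing the two-sided tail probability P(|X| > x) of a standard Normal with that of a Student-t with 2 degrees of freedom. The choice of 2 degrees of freedom is an assumption, picked because its CDF has a simple closed form; the function names are illustrative.

```python
import math

def normal_two_sided_tail(x: float) -> float:
    """P(|Z| > x) for a standard Normal Z."""
    return math.erfc(x / math.sqrt(2.0))

def student_t2_two_sided_tail(x: float) -> float:
    """P(|T| > x) for a Student-t with 2 degrees of freedom (closed-form CDF)."""
    return 1.0 - x / math.sqrt(2.0 + x * x)

# Compare how much probability each distribution leaves far from the centre.
for x in (2.0, 4.0, 6.0):
    pn = normal_two_sided_tail(x)
    pt = student_t2_two_sided_tail(x)
    print(f"x={x:>3}: Normal {pn:.2e}  t(2) {pt:.2e}  ratio {pt / pn:,.0f}")
```

By x = 4 the t(2) tail is already hundreds of times heavier than the Normal one, so a far-away value is shocking under one distribution and unremarkable under the other. That asymmetry is what drives the four cases below.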

He then gives the following four prior/likelihood combinations:

1) Normal prior, Normal likelihood (all is fine here: the posterior is a compromise between prior and likelihood).

2) t-distribution prior, t-distribution likelihood (things start to go awry: we now have a bimodal posterior).

3) t-distribution prior, Normal likelihood (the posterior mimics the likelihood; in a way, the prior had absolutely no sway).

4) Normal prior, t-distribution likelihood (the posterior mimics the prior; in a way, the likelihood had absolutely no sway).
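The four cases can be reproduced with a simple grid approximation. This is a minimal sketch under assumed settings, not McElreath’s own code: a prior centred at 0, a single observation at y = 10 that conflicts with it, unit scales, and Student-t distributions with 2 degrees of freedom. It prints each posterior’s mean and the locations of its modes.

```python
import math

# Assumed setup (not taken from the tweet): prior centred at 0, one observation
# at y = 10, unit scales, Student-t with 2 degrees of freedom.
PRIOR_LOC, Y_OBS, SCALE, DF = 0.0, 10.0, 1.0, 2.0

def log_normal(x, loc, scale):
    """Unnormalised log density of Normal(loc, scale)."""
    z = (x - loc) / scale
    return -0.5 * z * z

def log_student_t(x, loc, scale, df=DF):
    """Unnormalised log density of Student-t(df, loc, scale)."""
    z = (x - loc) / scale
    return -0.5 * (df + 1.0) * math.log1p(z * z / df)

def grid_posterior(log_prior, log_lik, lo=-5.0, hi=15.0, n=2001):
    """Grid approximation: posterior is proportional to prior x likelihood."""
    grid = [lo + (hi - lo) * i / (n - 1) for i in range(n)]
    log_post = [log_prior(t) + log_lik(t) for t in grid]
    m = max(log_post)
    w = [math.exp(lp - m) for lp in log_post]  # subtract max to avoid underflow
    total = sum(w)
    return grid, [wi / total for wi in w]

def summarise(grid, post):
    """Posterior mean plus interior local maxima (the modes) on the grid."""
    mean = sum(t * p for t, p in zip(grid, post))
    modes = [grid[i] for i in range(1, len(post) - 1)
             if post[i] > post[i - 1] and post[i] > post[i + 1]]
    return mean, modes

CASES = {
    "1) Normal prior, Normal likelihood": (log_normal, log_normal),
    "2) t prior, t likelihood": (log_student_t, log_student_t),
    "3) t prior, Normal likelihood": (log_student_t, log_normal),
    "4) Normal prior, t likelihood": (log_normal, log_student_t),
}

results = {}
for name, (prior_fn, lik_fn) in CASES.items():
    grid, post = grid_posterior(
        lambda t: prior_fn(t, PRIOR_LOC, SCALE),
        lambda t: lik_fn(Y_OBS, t, SCALE),  # density of y given location theta
    )
    mean, modes = summarise(grid, post)
    results[name] = (mean, modes)
    print(f"{name}: mean={mean:.2f}, modes={[round(m, 2) for m in modes]}")
```

Under these assumptions, case 1 lands midway between prior and data, case 2 shows two modes, and cases 3 and 4 sit next to the likelihood and the prior respectively. Changing the degrees of freedom or the distance between prior and data is a quick way to see how little intuition helps here.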

Now what does all this mean?

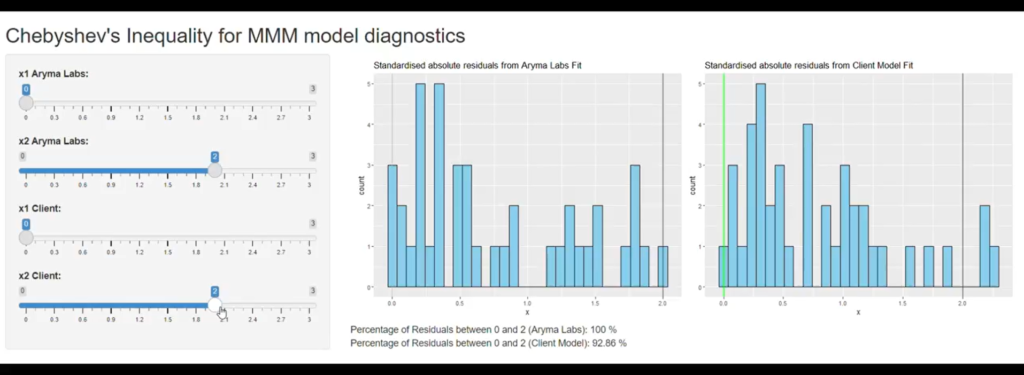

I can speak to Bayesian MMM, and perhaps one could generalize this to other situations where people assume the Normal distribution is the most common distribution in nature (it is not).

In Bayesian MMM, most people assume and specify a Normal distribution as the prior for media/marketing variables. The problem is that not only is the Normal distribution uninformative in many cases, it also does not help much when your likelihood follows a fat-tailed distribution (like the t-distribution): as in case 4 above, the posterior simply mimics the prior.

My point is not that Bayesian MMM or Bayesian inference is useless. My point is rather that it requires a lot of hard work and knowledge to get it right.

An average MMM analyst may not even think about how probability distributions combine. But in the Bayesian world, you have to think about exactly that: how the prior and the likelihood multiply to form the posterior.
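The operation at the heart of this is Bayes’ rule, which multiplies prior and likelihood pointwise and then renormalises. Only in special cases, such as a Normal prior with a Normal likelihood (case 1 above), does the product have a tidy closed form. With a prior $\theta \sim \mathcal{N}(\mu_0, \tau_0^2)$ and one observation $y \mid \theta \sim \mathcal{N}(\theta, \sigma^2)$ (symbols introduced here for illustration):

$$
p(\theta \mid y) \propto p(\theta)\, p(y \mid \theta)
$$

$$
\theta \mid y \sim \mathcal{N}\!\left(\frac{\mu_0/\tau_0^2 + y/\sigma^2}{1/\tau_0^2 + 1/\sigma^2},\ \left(\frac{1}{\tau_0^2} + \frac{1}{\sigma^2}\right)^{-1}\right)
$$

The posterior mean is a precision-weighted average of the prior mean and the data; for example, $\mu_0 = 0$, $y = 10$, $\tau_0 = \sigma = 1$ gives $\mathcal{N}(5, 1/2)$. Swap either factor for a t-distribution and no such simple formula is available, which is exactly where intuition breaks down.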

In a nutshell, Bayesian inference or Bayesian MMM is like a sledge on ice with no guardrails. So many ways to veer off!!

To summarize, in the Bayesian MMM context: “Don’t trust intuition, for even simple prior + likelihood scenarios defy it”.

Speaking of the fat-tail property of the t-distribution: it also helps in t-SNE (see my post on the topic in the Resources section if interested).
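As a small illustration of why the fat tail matters there (a sketch, not taken from the linked post): t-SNE measures similarity in the low-dimensional map with a Student-t kernel with one degree of freedom, $(1 + d^2)^{-1}$, whereas the original SNE used a Gaussian kernel, $e^{-d^2}$. The function names below are hypothetical.

```python
import math

def gaussian_kernel(d: float) -> float:
    """Unnormalised Gaussian similarity, as in the low-dimensional map of SNE."""
    return math.exp(-d * d)

def student_t1_kernel(d: float) -> float:
    """Unnormalised Student-t (1 dof, i.e. Cauchy) similarity used by t-SNE."""
    return 1.0 / (1.0 + d * d)

# Similarity as a function of map distance d under each kernel.
for d in (0.5, 1.0, 2.0, 4.0):
    print(f"d={d}: gaussian={gaussian_kernel(d):.2e}  student-t={student_t1_kernel(d):.2e}")
```

The Gaussian similarity collapses exponentially with distance while the Student-t one decays only polynomially, so a given similarity corresponds to a much larger map distance under the heavy-tailed kernel. Moderately dissimilar points can therefore be pushed further apart, which is how t-SNE eases the so-called crowding problem.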

The link to Richard McElreath’s tweet is also in the Resources section.

Resources:

- Epistemic Uncertainty: https://www.linkedin.com/posts/venkat-raman-analytics_datascience-statistics-machinelearning-activity-7105052143824375808-CZF2?utm_source=share&utm_medium=member_desktop

- Richard McElreath’s tweet: https://x.com/rlmcelreath/status/1701165075493470644?s=20

- Why t-distribution in t-SNE: https://www.linkedin.com/posts/venkat-raman-analytics_datascience-datascientists-machinelearning-activity-6927503875306397696-80hj?utm_source=share&utm_medium=member_desktop